Most of the time I use the RawTherapee conversion tool to convert RAW photos from my Nikon D3200 to JPG. RawTherapee is cross-platform, and it has been released under the GNU General Public License Version 3 since January 2010. Over the years the application has attracted many programmers and photographers. It is a really powerful tool, but something started to bother me after I checked the latest version. First, let's see what Lightroom offers the photographer:

A few names need more explanation, such as how the Shadows and Highlights sliders work or what Clarity is, but nothing is extremely complicated. Now let's see what RawTherapee offers:

Toolbar of RawTherapee for the Exposure settings. This is the first thing the user edits.

It offers an "Auto Levels" button that automatically expands the histogram based on the percentage of clipped pixels. And then there are sliders, a lot of sliders. Exposure also exists in Lightroom, but then there are Highlights and a Highlights compression threshold, and the threshold has no equivalent in Lightroom. Shadows works the same way, but without a threshold. Then there is Lightness, again without a threshold. Saturation is fine, although I would expect it in a different tab. Then you also have two (!) tone curves to play with. The option that does not exist under that name in RawTherapee is Clarity: the equivalent is called local contrast, and it is in a different tab. Let's see:

|

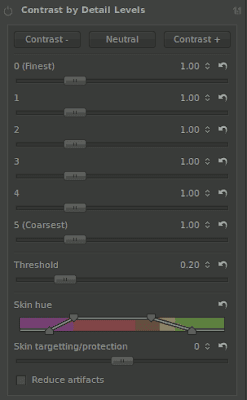

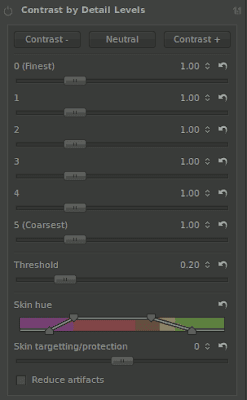

| Options of contrast by detail levels in rawtherapee. |

More options, but it still seems fine and not too complicated. However, there is a new tab called Wavelet Levels that does essentially the same thing, but with many more options:

|

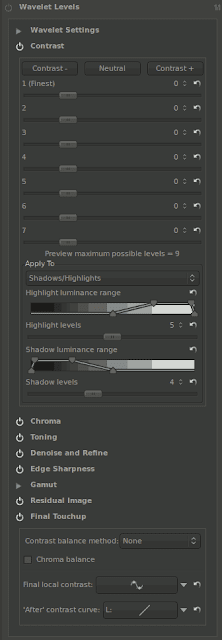

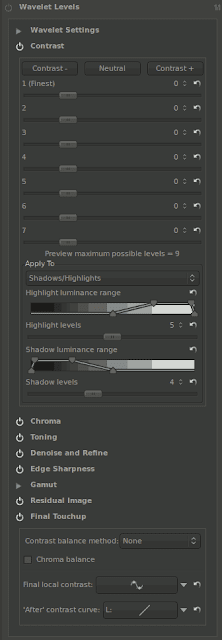

| Wavelet levels RawTherapee. |

Yes, there is a lot of documentation, but the available settings are better suited to a research scientist than to an ordinary photographer or user.
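For readers curious about the "Auto Levels" option in the Exposure tab: expanding the histogram based on a percentage of clipped pixels is, in general terms, a percentile-based levels stretch. The Python function below is a minimal sketch of that general idea under stated assumptions, not RawTherapee's actual implementation. The name `auto_levels` and the `clip_percent` parameter are hypothetical, and the sketch works on a flat list of luminance values in [0, 1] instead of a real RAW image.

```python
def auto_levels(pixels, clip_percent=0.1):
    """Stretch luminance so that up to clip_percent % of pixels clip at each end.

    Hypothetical helper for illustration only: `pixels` is a flat list of
    luminance values in [0, 1], and `clip_percent` is an assumed parameter,
    not RawTherapee's real setting or algorithm.
    """
    if not pixels:
        return []
    ordered = sorted(pixels)
    n = len(ordered)
    # How many pixels may be sacrificed (clipped) at each end of the histogram.
    k = int(n * clip_percent / 100.0)
    k = min(k, (n - 1) // 2)  # keep the low index at or below the high index
    low, high = ordered[k], ordered[n - 1 - k]
    if high <= low:
        return list(pixels)  # flat image: nothing to stretch
    scale = 1.0 / (high - low)
    # Map [low, high] linearly onto [0, 1] and clamp the clipped tails.
    return [min(1.0, max(0.0, (p - low) * scale)) for p in pixels]


if __name__ == "__main__":
    print(auto_levels([0.25, 0.5, 0.75], clip_percent=0))  # prints [0.0, 0.5, 1.0]
```

Sorting the whole list keeps the sketch short; a real raw converter would more likely work from a histogram, which avoids the O(n log n) sort on millions of pixels.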